How to Use the RICE Framework for Effective Product Management Prioritization

Introduction

In the fast-paced world of product management, prioritization is a constant challenge. With endless feature requests, user needs, and business goals, how do you decide where to focus?

Enter the RICE framework, a simple yet powerful tool to help you prioritize work systematically and confidently. By evaluating projects based on Reach, Impact, Confidence, and Effort, RICE brings clarity and helps you base decisions on data rather than intuition.

But how exactly does RICE work, and how can you implement it in your daily workflow?

In this article, we’ll break down the framework, provide practical examples, and show you how to integrate it into your routine.

RICE Framework: Prioritize Projects with Confidence

The RICE scoring framework is a robust tool for product managers striving to make informed, objective prioritization decisions. By weighing each project against four key criteria (Reach, Impact, Confidence, and Effort), RICE helps reduce bias and guesswork, allowing you to focus on high-value opportunities.

Whether you’re evaluating feature requests or planning quarterly goals, RICE offers a structured approach to make prioritization smoother and more effective. Let’s explore each part of this framework, look at how to calculate RICE scores, and discuss ways to apply it in your day-to-day product management work.

Reach: Estimating the Scope of Your Project

Reach is the metric that assesses how many users or customers a project could potentially affect within a specific time period. Estimating reach means choosing a unit and a time window, such as unique users per month or tasks completed per month, and then estimating how many of those the project will touch.

Example Calculation: If a feature update might impact 5,000 users monthly, you’d assign a reach value of 5,000.

How to Calculate Reach

The reach calculation often requires data from analytics tools or historical user data. If exact numbers aren’t available, use estimates based on similar past initiatives. It’s important to remain realistic with reach numbers, as overestimation can skew the overall score.
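If your analytics tool can export raw events, monthly reach can be approximated by counting the distinct users who performed the action your project affects. The sketch below assumes a hypothetical export of `(user_id, event_name, timestamp)` tuples; the event name `"open_settings"`, the sample rows, and the `estimate_reach` helper are placeholders, not part of any particular analytics product.

```python
from datetime import datetime

# Hypothetical analytics export: (user_id, event_name, timestamp).
# Replace these rows with data from your own analytics tool.
events = [
    ("u1", "open_settings", datetime(2024, 5, 3)),
    ("u2", "open_settings", datetime(2024, 5, 10)),
    ("u1", "open_settings", datetime(2024, 5, 21)),  # repeat visit, counted once
    ("u3", "checkout", datetime(2024, 5, 12)),       # different event, ignored
    ("u4", "open_settings", datetime(2024, 6, 2)),   # outside the window, ignored
]

def estimate_reach(events, event_name, start, end):
    """Count unique users who triggered `event_name` between start (inclusive) and end (exclusive)."""
    users = {
        user_id
        for user_id, name, ts in events
        if name == event_name and start <= ts < end
    }
    return len(users)

reach = estimate_reach(events, "open_settings", datetime(2024, 5, 1), datetime(2024, 6, 1))
print(reach)  # 2
```

Counting distinct users rather than raw events keeps a few heavy users from inflating the number, which is one common source of the overestimation mentioned above.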

Key Point: Aligning Reach with Goals

Ensure that the reach aligns with your strategic goals. A high reach is only valuable if it’s impacting the right users or achieving meaningful outcomes for the business.

Impact: Determining the Value to Users

Impact reflects the potential value or benefit that the project could deliver to each user it affects. Unlike reach, which is quantitative, impact is often subjective and rated on a scale (e.g., 0.25 for minimal impact, 0.5 for low, 1 for medium, 2 for significant, and 3 for massive impact).

Example Calculation: If implementing a new onboarding flow could significantly increase user retention, it might earn an impact score of 2.

Rating Impact

Assess impact based on how deeply the project will influence user experience, engagement, or other critical metrics. When in doubt, gather input from stakeholders or use historical data to inform impact assumptions.

Key Point: Consistency in Impact Scoring

Maintaining consistency in impact scoring is crucial for fair comparisons. Establish a scale with examples to make future impact ratings more reliable across various projects.
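One way to keep impact ratings consistent is to write the scale down once and check every rating against it. This sketch encodes a 0.25 to 3 scale (minimal, low, medium, significant, massive); the labels, the `IMPACT_SCALE` table, and the `impact_value` helper are illustrative assumptions that you would adapt to your team's own definitions.

```python
# A shared impact scale, written down once so every project is rated the same way.
# Labels and values are an assumed 0.25-3 scale; adjust them to your team's definitions.
IMPACT_SCALE = {
    "minimal": 0.25,
    "low": 0.5,
    "medium": 1.0,
    "significant": 2.0,
    "massive": 3.0,
}

def impact_value(label: str) -> float:
    """Return the numeric impact for a scale label, rejecting anything off-scale."""
    key = label.strip().lower()
    if key not in IMPACT_SCALE:
        allowed = ", ".join(IMPACT_SCALE)
        raise ValueError(f"Unknown impact label {label!r}; use one of: {allowed}")
    return IMPACT_SCALE[key]

# The onboarding example: a significant increase in retention.
print(impact_value("Significant"))  # 2.0
```

Rejecting unknown labels forces a conversation about the scale instead of letting someone quietly invent a "4" for a pet project.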

Confidence: Reducing Guesswork in Scoring

Confidence measures how certain you are about your reach, impact, and effort estimates. Assign a confidence score from 0% to 100%, with higher values indicating greater certainty in your estimates.

Example Calculation: If the data backing your impact score is strong but reach is estimated, you might set a confidence level of 80%.

Evaluating Confidence

Confidence is particularly important for projects with ambiguous data. Use it to adjust scores and avoid overcommitting to uncertain initiatives. The confidence metric is a safeguard against investing resources based on weak assumptions.
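Confidence works as a multiplier on Reach times Impact, so weakly supported estimates are discounted automatically. This sketch, using hypothetical numbers and helper names (`confidence_fraction`, `discounted_value`), converts a percentage into a fraction and shows how the same estimates shrink as confidence drops.

```python
def confidence_fraction(percent: float) -> float:
    """Convert a confidence percentage (0-100) into the 0-1 multiplier used by RICE."""
    if not 0 <= percent <= 100:
        raise ValueError("confidence must be between 0 and 100 percent")
    return percent / 100

def discounted_value(reach: float, impact: float, confidence_pct: float) -> float:
    """Reach x Impact, discounted by how sure you are of those estimates."""
    return reach * impact * confidence_fraction(confidence_pct)

# Hypothetical project: 5,000 users per month at an impact of 2.
for pct in (100, 80, 50):
    print(f"{pct}% confidence -> {discounted_value(5000, 2, pct):,.0f}")
```

Halving confidence halves the value, which is how the metric keeps a shaky estimate from outranking a well-supported one.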

Key Point: Improving Confidence Accuracy

If confidence is low, consider running a small experiment or collecting additional data to boost confidence before making a major investment. This can save time and resources in the long run.

Effort: Balancing Complexity with Value

Effort refers to the total work required to complete the project, measured in person-hours, days, or sprints. A project’s effort score inversely affects its priority; less effort generally means a higher priority when other criteria are equal.

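Example Calculation: If a feature will take a small team one full two-week sprint to implement, you’d score effort as 2 if you measure effort in weeks. Whichever unit you choose, apply it to every project so the scores stay comparable.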

Calculating Effort

Estimate effort based on previous project timelines and available resources. Involve the team to ensure accuracy, as underestimating effort can lead to rushed work and lower-quality results.
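Because estimates often arrive in different units (days, sprints, headcount), it can help to convert them to a single unit such as person-months before scoring. The conversion factors below (20 working days per month, 10 working days per two-week sprint) and the `to_person_months` helper are assumptions for illustration; replace them with your team's own calendar.

```python
# Assumed conversion factors; replace them with your team's own calendar.
WORKING_DAYS_PER_MONTH = 20
WORKING_DAYS_PER_SPRINT = 10  # a two-week sprint

def to_person_months(people: int, days: float = 0, sprints: float = 0) -> float:
    """Convert a staffing estimate (people x duration) into person-months of effort."""
    total_days = days + sprints * WORKING_DAYS_PER_SPRINT
    return people * total_days / WORKING_DAYS_PER_MONTH

# A small team of 3 working for one two-week sprint:
print(to_person_months(people=3, sprints=1))  # 1.5
```

The exact unit matters less than using it everywhere: mixing weeks for one project and person-months for another would make the resulting scores incomparable.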

Key Point: Cross-Functional Collaboration on Effort Estimation

Involve engineering, design, and other relevant teams to validate effort estimates. This not only improves accuracy but also builds cross-functional buy-in for prioritization decisions.

Implementing RICE in Daily Product Management

RICE scoring can become an essential part of your prioritization process, especially during roadmap planning or sprint prep. Here’s how to incorporate RICE scoring effectively:

- Set Regular Review Sessions: Implement RICE scoring during roadmap reviews or sprint planning sessions to assess new projects consistently. Establishing a routine around scoring can streamline decision-making and increase transparency with stakeholders.

- Incorporate RICE into Team Tools: Many project management tools allow custom fields, so consider adding RICE criteria in tools like Jira or Trello for easy reference. Documenting RICE scores within these platforms helps keep everyone on the same page.

- Keep Adjusting Scores: Product priorities shift, so revisit scores periodically, especially if new data emerges. Adjusting RICE scores can ensure your roadmap stays aligned with real-world conditions and strategic goals.

Implementing RICE Scoring with a Table

Implementing the RICE scoring framework is straightforward when using a table to organize and evaluate your projects.

Whether you use software like Excel, Google Sheets, or a simple hand-drawn chart, the process involves setting up a structured format to calculate and compare scores.

Why do tables work well for RICE scoring?

Tables are a flexible and visual way to organize RICE scores. They can easily be customized to fit your workflow, shared with team members, and scaled up as needed. Start simple and expand as your needs grow!

Setting Up Your RICE Scoring Table

-

Define the Columns: Your table should include the following columns:

- Feature Name: The name or description of the feature or idea being evaluated.

- Reach: The estimated number of users the project will impact.

- Impact: The degree to which the project will affect each user (on a 1-5 scale).

- Confidence: Your level of certainty about the estimates for Reach, Impact, and Effort (on a percentage scale, e.g., 80% or 0.8).

- Effort: The total time or resources required to complete the project (measured in person-months or other units).

- RICE Score: The calculated priority score for the feature.

-

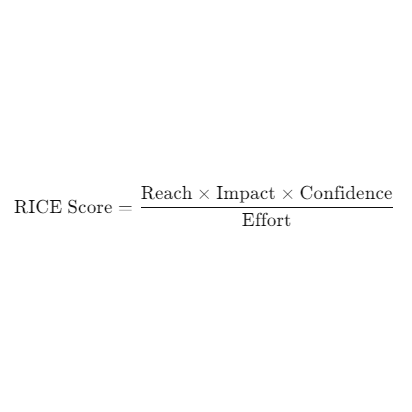

Create a Formula for the RICE Score: Use the formula:

= (B2 * C2 * D2) / E2 -

Include a Notes Column: Add a column for notes or additional context about each project.
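The spreadsheet formula above can be mirrored in code, for example to score ideas pulled from a backlog export. This minimal sketch assumes the same columns, with Confidence stored as a fraction, and adds a guard against zero effort (which would make the spreadsheet formula return a #DIV/0! error). The `Feature` class and the sample values are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Feature:
    name: str
    reach: float       # e.g., users per month
    impact: float      # on your agreed impact scale
    confidence: float  # as a fraction, e.g., 0.8 for 80%
    effort: float      # e.g., person-months
    notes: str = ""

    @property
    def rice_score(self) -> float:
        # Same calculation as = (B2 * C2 * D2) / E2 in the spreadsheet.
        if self.effort <= 0:
            raise ValueError(f"{self.name}: effort must be positive")
        return self.reach * self.impact * self.confidence / self.effort

onboarding = Feature("New onboarding flow", reach=5000, impact=2, confidence=0.8, effort=2)
print(onboarding.rice_score)  # 4000.0
```

Keeping the notes field alongside the numbers mirrors the Notes column, so the reasoning behind each estimate travels with the score.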

Example RICE Scoring Table

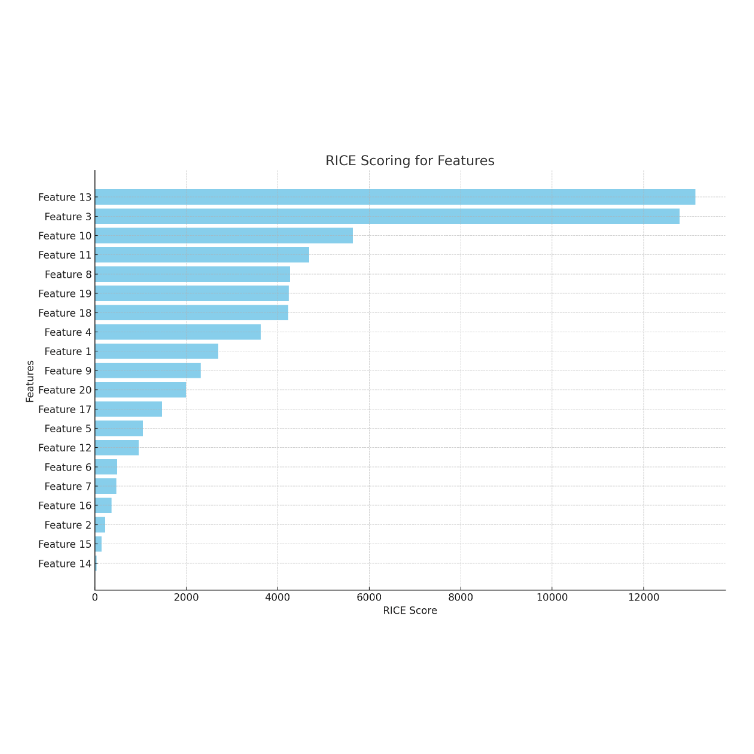

Here's a bar chart displaying the RICE scores for 20 different features. Each feature is ranked by its calculated RICE score, with the highest-scoring features appearing at the top.

This visual can help quickly identify which projects or features should be prioritized based on their impact, reach, confidence, and effort.

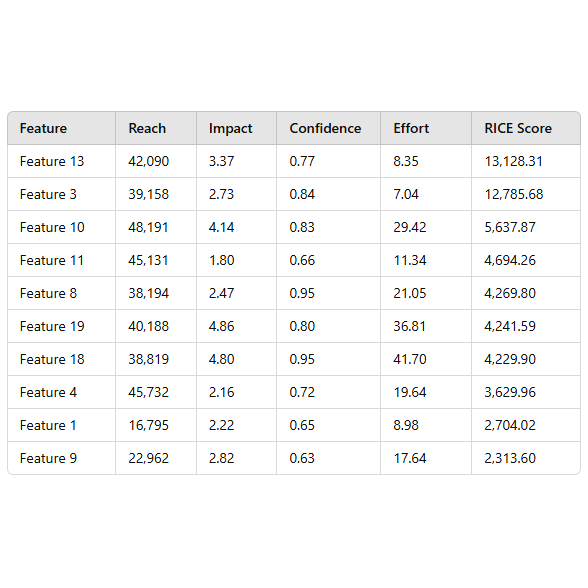

Here’s the RICE scoring table that corresponds to the bar chart, in CSV format. You can also use it as a template to guide your setup:

Feature,Reach,Impact,Confidence,Effort,RICE Score

Feature 13,42090,3.37,0.77,8.35,13128.31

Feature 3,39158,2.73,0.84,7.04,12785.68

Feature 10,48191,4.14,0.83,29.42,5637.87

Feature 11,45131,1.80,0.66,11.34,4694.26

Feature 8,38194,2.47,0.95,21.05,4269.80

Tips for Effective Implementation

Use Consistent Units: Ensure Reach and Effort are measured consistently (e.g., users impacted or hours spent).

Set Clear Scales: Define a standard scale for Impact (e.g., 0.25 = minimal to 3 = massive, or 1 = minimal to 5 = massive) and Confidence (e.g., percentages), and use it for every project.

Collaborate: Discuss scoring with your team to align on estimates and reduce bias.

Sort by RICE Score: Once you’ve calculated the scores, rank the projects from highest to lowest to determine priorities.

Revisit Regularly: Update your table periodically as new data becomes available or priorities shift.
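The sorting tip can be automated once scores live in a CSV file. This sketch uses Python's standard `csv` module on the five template rows shown earlier, recomputes each score with the RICE formula, and ranks features from highest to lowest; the `rank_features` helper is a hypothetical name. Recomputed scores differ slightly from the RICE Score column (for Feature 13, about 13,080 rather than 13,128), presumably because the Impact, Confidence, and Effort values in the template are rounded to two decimals; the ranking order is unchanged.

```python
import csv
import io

# The five example rows from the template table.
TEMPLATE = """Feature,Reach,Impact,Confidence,Effort,RICE Score
Feature 13,42090,3.37,0.77,8.35,13128.31
Feature 3,39158,2.73,0.84,7.04,12785.68
Feature 10,48191,4.14,0.83,29.42,5637.87
Feature 11,45131,1.80,0.66,11.34,4694.26
Feature 8,38194,2.47,0.95,21.05,4269.80
"""

def rank_features(csv_text: str) -> list[tuple[str, float]]:
    """Recompute RICE for each row and return (feature, score) pairs, highest first."""
    reader = csv.DictReader(io.StringIO(csv_text))
    scored = []
    for row in reader:
        reach = float(row["Reach"])
        impact = float(row["Impact"])
        confidence = float(row["Confidence"])
        effort = float(row["Effort"])
        scored.append((row["Feature"], reach * impact * confidence / effort))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

for name, score in rank_features(TEMPLATE):
    print(f"{name}: {score:,.0f}")
```

Recomputing from the inputs rather than trusting a stored score column makes it easy to re-rank after you revisit estimates.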

Conclusion

Using the RICE framework is a game-changer for product managers navigating the complexities of prioritization.

By focusing on reach, impact, confidence, and effort, you can streamline decision-making, reduce bias, and better allocate resources.

Whether planning for the next sprint or making quarterly roadmap adjustments, RICE provides a structured, data-driven approach that supports better alignment across teams.

With RICE in your toolkit, prioritizing product opportunities becomes simpler, and you can confidently focus on initiatives that drive both immediate impact and long-term growth.

This article is part of the "Frameworks for Product Managers" series.